¶ Aoraki GPU

¶ H100 GPUs (NVLINK)

-

Request access following the Aoraki documentation

-

Log in via ssh

ssh YourOtagoUsername@aoraki-login.otago.ac.nz

and setup ssh keys for passorwordless access.

- You can add this entry to .ssh config to make an alias and enable vscode to connect to the login node as a remote host.

Host aoraki

HostName aoraki-login.otago.ac.nz

User YourOtagoUsername

ForwardX11 yes

- Start interactive session on the H100 partition for testing

$ srun -v --partition=aoraki_gpu_H100 --ntasks=1 --gres=gpu:H100:1 --cpus-per-task=8 --time=10:30:00 --mem=100G --pty /bin/bash

You should find four nvlinked devices

$ lspci | grep -i nvidia

4e:00.0 3D controller: NVIDIA Corporation GH100 [H100 SXM5 80GB] (rev a1)

5f:00.0 3D controller: NVIDIA Corporation GH100 [H100 SXM5 80GB] (rev a1)

cb:00.0 3D controller: NVIDIA Corporation GH100 [H100 SXM5 80GB] (rev a1)

db:00.0 3D controller: NVIDIA Corporation GH100 [H100 SXM5 80GB] (rev a1)

To gain four of them in the interactive job, instead start with e.g. (increasing memory and cpus proportionately)

$ srun -v --partition=aoraki_gpu_H100 --ntasks=1 --gres=gpu:H100:4 --cpus-per-task=32 --time=10:30:00 --mem=400G --pty /bin/bash

-

Start julia (simplest is to install juliaup to manage versions).

-

To install and test CUDA in julia (note that correct NVIDIA drivers must be installed separately and managed by Aoraki technical support, currently Peter Higbee):

pgk>add CUDA

NOTE: At present there is a driver version mismatch, generating the following error:

julia> using CUDA

┌ Error: You are using a local CUDA 12.4.0 toolkit, but CUDA.jl was precompiled for CUDA 12.1.0. This is unsupported.

│ Call `CUDA.set_runtime_version!` to update the CUDA version to match your local installation.

└ @ CUDA ~/.julia/packages/CUDA/htRwP/src/initialization.jl:128

This has to be manually corrected once, triggering a recompile upon restart of julia:

julia> CUDA.set_runtime_version!(v"12.1.0")

\[ Info: Configure the active project to use CUDA 12.1; please re-start Julia for this to take effect.

julia>exit()

$ julia

julia> using CUDA

Downloaded artifact: CUDA_Runtime

Precompiling CUDA

2 dependencies successfully precompiled in 48 seconds. 65 already precompiled.

- Test CUDA. If you have threds enabled this will run multiple tests in parallel. You can also start julia with

julia -t autoto get as many threads as cores (avoid hyperthreading).

pkg>test CUDA

This should run with ~99% of tests completed successfully (There are a lot of tests, and it is common for a couple of tests to error due to different hardware support).

You can query card usage by starting another interactive job and running

$ nvidia-smi -l 1

Note that the CPU on this partition is quite fast:

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 57 bits virtual

Byte Order: Little Endian

CPU(s): 112

On-line CPU(s) list: 0-111

Vendor ID: GenuineIntel

Model name: Intel(R) Xeon(R) Platinum 8480+

CPU family: 6

Model: 143

Thread(s) per core: 1

Core(s) per socket: 56

Socket(s): 2

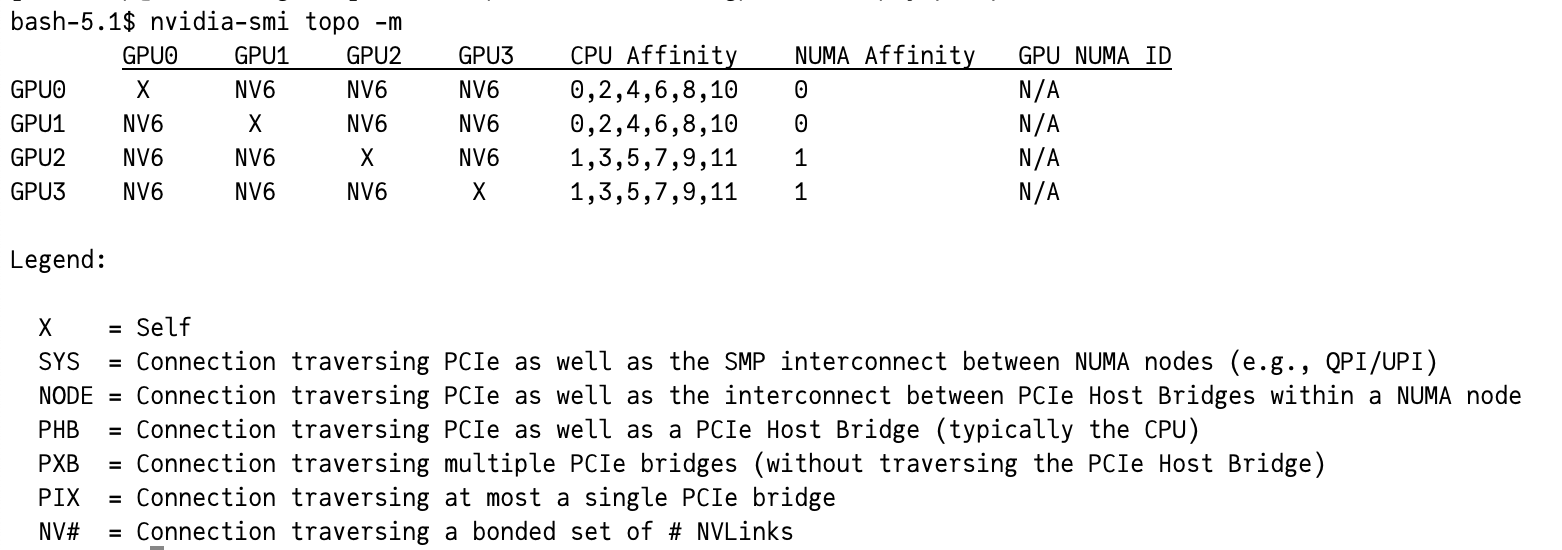

¶ NVLINK

Seems to be active (need a good test)

bash-5.1$ nvidia-smi topo -m

¶ DiffEqGPU

Examples are sourced from DiffEqGPU.

Do the DiffEqGPU.jl tests pass?

pkg> add DiffEqGPU

pkg> test DiffEqGPE

Simple test problem to check GPU functionality

using OrdinaryDiffEq, CUDA, Adapt, LinearAlgebra

import CUDA.cu

cu(x)=adapt(CuArray,x) # Float64 for precision

u0 = cu(rand(1000))

A = cu(randn(1000, 1000))

f(du, u, p, t) = mul!(du, A, u)

prob = ODEProblem(f, u0, (0.0, 1.0))

sol = solve(prob, Tsit5())

Using this Float32 example, we have a test ensembleproblem

using DiffEqGPU, OrdinaryDiffEq, StaticArrays, CUDA

function lorenz(u, p, t)

σ = p[1]

ρ = p[2]

β = p[3]

du1 = σ * (u[2] - u[1])

du2 = u[1] * (ρ - u[3]) - u[2]

du3 = u[1] * u[2] - β * u[3]

return SVector{3}(du1, du2, du3)

end

u0 = @SVector [1.0; 0.0; 0.0]

tspan = (0.0, 10.0)

p = @SVector [10.0, 28.0, 8 / 3.0]

prob = ODEProblem{false}(lorenz, u0, tspan, p)

prob_func = (prob, i, repeat) -> remake(prob, p = (@SVector rand(3)) .* p)

monteprob = EnsembleProblem(prob, prob_func = prob_func, safetycopy = false)

sol = solve(monteprob, GPUTsit5(), EnsembleGPUKernel(CUDA.CUDABackend()),

trajectories = 10_000)